AI Security Notes 4/10/2025

The first in a weekly series of posts on what I’m seeing in AI security. This week: what human-level AI coding will mean for security; why system-level security has higher ROI than model-level security; and progress in AI cyber agents

System-level AI security is making more progress than model-level security

Google DeepMind put out a good system-level security paper on prompt injection defense called CaMeL. The architecture uses a privileged LLM for planning, a quarantined LLM to process untrusted data, security metadata ("capabilities") attached to values to track where data came from and who may read it, and policies enforced by an interpreter to ensure nothing that violates security or privacy happens. This isn’t a silver bullet for all agent security problems, but it allows some really cool deterministic security guarantees in some applications: untrusted or tainted data can be manipulated symbolically within a custom Python interpreter without ever touching the planning LLM’s context window.

This is important because securing AI purely by making models inherently safe has made only slow, incremental progress over the past decade. Detecting prompt injection with guardrails, and fine-tuning LLMs to be more resilient to prompt injection, will always be a hard cat-and-mouse game for defenders. Designing secure systems around AI currently has higher ROI on the margin and can sometimes provide hard guarantees.
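To make the capability idea concrete, here is a minimal sketch under a much simplified model: each value carries a set of sources and a set of allowed readers, derived values inherit both, and a policy check runs before a tool executes. The names (`Tagged`, `derive`, `send_email`, `TRUSTED_SOURCES`) and the specific policy are hypothetical, not the paper’s API; in CaMeL the privileged LLM writes the program and the interpreter does this bookkeeping automatically.

```python
from dataclasses import dataclass
from typing import Any, Callable, FrozenSet

ANYONE = "*"  # wildcard reader: data may be shown to anyone
TRUSTED_SOURCES = frozenset({"user"})  # hypothetical: only the user's own query is trusted


@dataclass(frozen=True)
class Tagged:
    """A value carrying capability metadata (hypothetical, simplified)."""
    value: Any
    sources: FrozenSet[str]  # provenance, e.g. {"user"} or {"tool:read_email"}
    readers: FrozenSet[str]  # who may receive this data; {"*"} means anyone


def _intersect_readers(a: FrozenSet[str], b: FrozenSet[str]) -> FrozenSet[str]:
    # The wildcard is the identity: intersecting with "anyone" changes nothing.
    if ANYONE in a:
        return b
    if ANYONE in b:
        return a
    return a & b


def derive(fn: Callable[..., Any], *args: Tagged) -> Tagged:
    """Compute a new value that inherits the union of its inputs' sources
    and the intersection of their readers (taint propagation)."""
    sources: FrozenSet[str] = frozenset()
    readers: FrozenSet[str] = frozenset({ANYONE})
    for arg in args:
        sources = sources | arg.sources
        readers = _intersect_readers(readers, arg.readers)
    return Tagged(fn(*(arg.value for arg in args)), sources, readers)


class PolicyViolation(Exception):
    pass


def send_email(recipient: Tagged, body: Tagged) -> str:
    """Hypothetical tool wrapper: the policy is checked before the side effect."""
    if not recipient.sources <= TRUSTED_SOURCES:
        raise PolicyViolation("recipient was derived from untrusted data")
    if ANYONE not in body.readers and recipient.value not in body.readers:
        raise PolicyViolation("recipient is not an allowed reader of the body")
    return f"sent to {recipient.value}"


# The user asked: "summarize my notes and send them to bob@example.com".
user_recipient = Tagged("bob@example.com", frozenset({"user"}), frozenset({ANYONE}))
notes = Tagged("q3 notes", frozenset({"tool:drive"}), frozenset({"bob@example.com"}))
summary = derive(str.upper, notes)

# An address the quarantined LLM extracted from an untrusted email.
injected = Tagged("attacker@evil.test", frozenset({"tool:read_email"}), frozenset({ANYONE}))

print(send_email(user_recipient, summary))
try:
    send_email(injected, summary)
except PolicyViolation as err:
    print("blocked:", err)
```

The point of the design is that the block happens in ordinary code, not in a model: however persuasive the injected email is, a recipient derived from it fails the provenance check deterministically.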

Related to the friction we’re seeing in model-level security: the leading mechanistic interpretability lab, Google DeepMind’s team led by Neel Nanda, has become more bearish on model-level safety interventions based on sparse autoencoders (SAEs). Their results show that safety enforcement based on SAE features does not generalize well to test data, and Nanda has said they’re deprioritizing this work.
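For readers who haven’t worked with SAEs, here is a minimal sketch of what a feature-based safety check looks like, using one common SAE form, f(x) = ReLU(W_enc(x − b_dec) + b_enc), with reconstruction x̂ = W_dec f(x) + b_dec and an L1 sparsity penalty (variants differ in the activation function). The tiny weights, the choice of feature 1 as the "harmful" feature, and the threshold are made-up assumptions; this does not reproduce DeepMind’s experiments.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(M: Matrix, v: Vector) -> Vector:
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def sae_encode(x: Vector, W_enc: Matrix, b_enc: Vector, b_dec: Vector) -> Vector:
    """f(x) = ReLU(W_enc (x - b_dec) + b_enc): sparse, non-negative feature activations."""
    centered = [xi - bi for xi, bi in zip(x, b_dec)]
    pre = [p + b for p, b in zip(matvec(W_enc, centered), b_enc)]
    return [max(0.0, p) for p in pre]


def sae_decode(f: Vector, W_dec: Matrix, b_dec: Vector) -> Vector:
    """x_hat = W_dec f + b_dec: reconstruct the activation from its features."""
    return [r + b for r, b in zip(matvec(W_dec, f), b_dec)]


def sae_loss(x: Vector, f: Vector, x_hat: Vector, l1_coeff: float) -> float:
    """Squared reconstruction error plus an L1 penalty that pushes features toward sparsity."""
    recon = sum((a - b) ** 2 for a, b in zip(x, x_hat))
    return recon + l1_coeff * sum(abs(v) for v in f)


# Toy parameters (hypothetical): 3-dim activations, 2 features. Feature 1 is
# *assumed* to track harmful requests; in practice that label comes from
# interpreting what the feature responds to.
W_enc = [[1.0, 0.0, 0.0], [0.0, 1.0, -1.0]]
b_enc = [0.0, -0.1]
b_dec = [0.0, 0.0, 0.0]
W_dec = [[1.0, 0.0], [0.0, 1.0], [0.0, -1.0]]  # here simply W_enc transposed
HARMFUL_FEATURE, THRESHOLD = 1, 0.5


def flag(x: Vector) -> bool:
    """Feature-based safety check: refuse if the 'harmful' feature fires strongly."""
    return sae_encode(x, W_enc, b_enc, b_dec)[HARMFUL_FEATURE] > THRESHOLD


print(flag([0.2, 0.9, 0.1]))  # feature 1 activation is about 0.7
print(flag([0.9, 0.1, 0.3]))  # feature 1 is clamped to 0 by the ReLU
```

The design makes the generalization worry easy to see: the check is only as reliable as the assumption that one learned feature tracks "harmful" on inputs unlike those it was labeled on.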

For the first time, the only reason powerful AI offensive cyber tools aren’t arriving faster is that offensive cyber is a niche market, not that we don’t know how to build them. We could build these by …

1) Standing up a diverse set of realistic cyber ranges and challenges for AI systems to attack during training

2) Building agentic scaffolding that allows LLMs to use offensive tools

3) Seeding training with some examples of human experts attacking these challenges while using these tools

4) Using reinforcement learning to fine-tune sufficiently large and powerful LLMs to automate operations against these cyber ranges

… this is the recipe the leading AI labs are following in coding. We don’t have these cyber capabilities because offensive cyber is a niche market, and coding is not.

There’s evidence that GPU-poor startups are making progress here by solving 1) and 2) and iterating on the scaffolding, but I’m not aware of any evidence of products being built with steps 3) and 4). It’s only a matter of time, probably measured in quarters or a couple of years.

On scaffolding-only progress, from what I can tell, the startup XBOW is furthest along. They claim their product

… autonomously solved ~75% of 543 web security challenges from platforms like PortSwigger and PentesterLab

… matched the top human pentester in a head-to-head test, solving 85% of 104 novel challenges in 28 minutes versus the human’s 40 hours.

… independently discovered and exploited a previously unknown vulnerability (CVE-2024-50334) in the open-source Scoold app, without human guidance.
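For scale, the claimed percentages convert to rough counts and a speedup as below. The inputs are XBOW’s own claims as quoted above, not independent measurements, and "~75%" makes the first count approximate.

```python
# XBOW's self-reported figures, as quoted above.
web_challenges, web_solve_rate = 543, 0.75      # "~75%", so the count is approximate
novel_challenges, novel_solve_rate = 104, 0.85
xbow_minutes, human_minutes = 28, 40 * 60       # 28 minutes vs. 40 hours

web_solved = round(web_challenges * web_solve_rate)        # about 407 challenges
novel_solved = round(novel_challenges * novel_solve_rate)  # about 88 challenges
speedup = human_minutes / xbow_minutes                      # roughly 86x faster

print(f"~{web_solved} web challenges, ~{novel_solved} novel challenges, {speedup:.0f}x speedup")
```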

Relevant to the above: race dynamics in AI capabilities risk a race to the bottom in AI security and safety

Leading labs are breaking prior frontier risk assessment commitments around measurement of dual-use cyber capabilities, highlighting the fragility of Biden-era voluntary commitments.

There’s an emerging consensus that powerful AI software engineers will arrive in the next few years, which matters for security if true

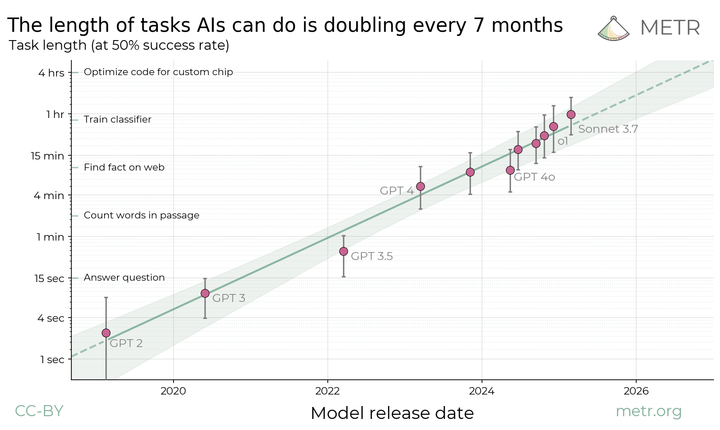

METR’s research found that the length of coding tasks AI agents can complete (at a 50% success rate) has grown exponentially, doubling roughly every seven months, and forecasts potential "AI coworker" autonomy within a year or two if current trends hold.
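METR’s headline estimate is that this 50% time horizon doubles roughly every seven months, so the trend is exponential (a straight line on a log-scale plot), and linear intuition badly underestimates where it leads. A worked sketch, assuming the trend simply continues and assuming a starting horizon of about one hour (an illustrative round number, not a figure from this post):

```python
import math

DOUBLING_MONTHS = 7.0  # METR's estimated doubling time for the 50% time horizon


def horizon_after(h0_minutes: float, months: float, doubling_months: float = DOUBLING_MONTHS) -> float:
    """Exponential trend: h(t) = h0 * 2 ** (t / doubling_time)."""
    return h0_minutes * 2 ** (months / doubling_months)


def months_until(h0_minutes: float, target_minutes: float, doubling_months: float = DOUBLING_MONTHS) -> float:
    """Invert the trend: t = doubling_time * log2(target / h0)."""
    return doubling_months * math.log2(target_minutes / h0_minutes)


H0 = 60.0              # assumption: a frontier model with roughly a one-hour horizon
WORK_WEEK = 40 * 60.0  # a 40-hour task, in minutes

print(f"{horizon_after(H0, 24) / 60:.1f} hours after two years")
print(f"{months_until(H0, WORK_WEEK):.1f} months until a one-work-week task")
```

Under these assumptions the horizon grows from one hour to roughly 11 hours in two years, and reaches a 40-hour task in a bit over three years; shifting the doubling time by a month or two moves those dates noticeably, which is why forecasts built on this trend vary so much.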

The “AI 2027” report, from a number of well-known AI writers and forecasters, forecasts rapid growth in AI capabilities by 2027, backed by lots of data. Their forecasts feel overly bullish and ‘sci-fi’, but the basic idea that AI will soon be good enough at coding and AI research to accelerate capabilities improvements seems directionally right.

The increased bullishness on AI coding comes from three places: 1) how much better models are getting at coding, 2) evidence that reinforcement learning works when applied to coding, and how much runway there is to scale it, 3) evidence that test-time chain-of-thought reasoning improves coding, and that hardware and algorithmic efficiency gains will scale it.

Key questions for the age of the AI vibe coder: How different is securing vibe-coded programs from securing code written by today’s unreliable human programmers, most of whom aren’t security experts? How much will it help offensive teams to have software engineering agents they can outsource tasks to? Which new kinds of offensive computer network operations automation will start to matter, and when?

Also on ‘bullishness’, we now have a product that really demonstrates the promise of multimodal understanding in LLMs

… in OpenAI’s GPT-4o image generation, which shows how natively multimodal models can generate images by jointly attending to image and text tokens. Native multimodality is an old idea, but GPT-4o is the most compelling demonstration yet that LLMs can learn to reason jointly across multiple modalities.

This matters because we can now be more confident there’s a long runway for AI to improve by training jointly on text, audio, video, and sensor data under the current paradigm. There’s more natural data to leverage, which relieves bottlenecks to progress, and more multimodal understanding and generation capabilities become possible.
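OpenAI hasn’t published GPT-4o’s architecture, so this is only a generic toy of what "jointly attending to image and text tokens" means: text and image tokens sit in one sequence, and a single causal attention operation lets image tokens draw directly on the text. The embeddings, their size, and the identity query/key/value projections are assumptions for illustration.

```python
import math
from typing import List

Vec = List[float]


def softmax(xs: Vec) -> Vec:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def causal_attention_weights(seq: List[Vec]) -> List[Vec]:
    """Scaled dot-product attention weights with a causal mask: position i
    attends to positions 0..i. Q/K/V projections are identity here for
    brevity; a real model learns them."""
    d = len(seq[0])
    weights = []
    for i, q in enumerate(seq):
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in seq[: i + 1]]
        weights.append(softmax(scores))
    return weights


def attend(seq: List[Vec], weights: List[Vec]) -> List[Vec]:
    """Each output is a weighted mix of the (identity-projected) values it attends to."""
    d = len(seq[0])
    return [[sum(w * seq[j][c] for j, w in enumerate(row)) for c in range(d)] for row in weights]


# Toy 2-dim embeddings (hypothetical). The prompt and the image tokens generated
# so far share one sequence, so image tokens can attend directly to text tokens.
text_tokens = [[1.0, 0.0], [0.5, 0.5]]
image_tokens = [[0.0, 1.0], [0.2, 0.8]]
seq = text_tokens + image_tokens

weights = causal_attention_weights(seq)
outputs = attend(seq, weights)
text_share = sum(weights[-1][: len(text_tokens)])
print(f"last image token puts {text_share:.0%} of its attention on text")
```

The contrast is with pipelines that hand a text prompt to a separate image model: here there is one set of weights and one sequence, so whatever the model learns about language is directly available while producing image tokens.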

More AI agents for cyber defense; how OpenAI is using AI for cyber defense

Microsoft launched specialized Security Copilot agents to automate workflows like Phishing Triage. Early feedback suggests they speed up response times and handle high-volume tasks well, but require human verification and more customization. Detailed public metrics on agent accuracy remain limited.

These agents are tangible examples of the trend toward greater AI autonomy in security. Although they require oversight now, they represent early steps in a rapidly evolving space, which is relevant given capability forecasts like METR’s.
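Microsoft hasn’t published how its agents decide what to hand back to people, so here is a hypothetical sketch of the human-verification pattern the early feedback describes: the agent only closes cases it is very confident are benign and routes everything else to analysts. `triage`, `Verdict`, the 0.95 threshold, and the keyword classifier standing in for the model are all illustrative assumptions, not Security Copilot’s API.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Verdict:
    label: str         # "phishing" or "benign"
    confidence: float  # 0.0 to 1.0


def triage(
    reports: List[str],
    classify: Callable[[str], Verdict],
    auto_close_threshold: float = 0.95,
) -> Tuple[List[str], List[Tuple[str, Verdict]]]:
    """Auto-close only high-confidence benign reports; everything else,
    including every suspected phish, goes to a human analyst to verify."""
    auto_closed: List[str] = []
    for_human: List[Tuple[str, Verdict]] = []
    for report in reports:
        verdict = classify(report)
        if verdict.label == "benign" and verdict.confidence >= auto_close_threshold:
            auto_closed.append(report)
        else:
            for_human.append((report, verdict))
    return auto_closed, for_human


def toy_classifier(report: str) -> Verdict:
    """Stand-in for the model: a keyword heuristic, purely for illustration."""
    text = report.lower()
    if "password" in text or "wire transfer" in text:
        return Verdict("phishing", 0.9)
    if "newsletter" in text:
        return Verdict("benign", 0.97)
    return Verdict("benign", 0.6)


reports = [
    "Monthly newsletter from the HR team",
    "Urgent: confirm your password here",
    "Invoice attached, please review",
]
closed, queued = triage(reports, toy_classifier)
print(len(closed), "auto-closed;", len(queued), "sent to analysts")
```

The threshold is the knob that trades analyst workload against the risk of auto-closing a real phish; tightening it is a cheap way to keep oversight while accuracy metrics are still thin.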

OpenAI is using its own models internally as "AI-driven security agents" to strengthen cyber defenses, improve threat detection, and enable rapid response.

They claim they’re training their models to be good at cybersecurity by curating cybersecurity-specific agentic training data.

They highlighted code security as a primary focus area where they aim to lead the industry in automated vulnerability finding and patching. They report achieving state-of-the-art results on public code security benchmarks and successfully identifying vulnerabilities in real-world open-source software using their models.